Dystopian Wearable Technology

How close are we to sci-fi tech? Her. Black Mirror. Cyberpunk 2077.

Recently, I rewatched the movie Her on a flight from Malaysia to Taiwan. With all the hype about AI, I find it helpful to start at the final, idealized product and work backwards from there. It got me thinking about the state of wearable technology, specifically always-online, ambient AI that constantly processes images, video & audio and gets smarter with every passing minute.

Picture this

In the movie Her, our protagonist Theodore falls in love with his AI voice assistant, Samantha. She is incredibly capable and can engage in a full conversation with you on any topic that interests you, including movies, classical music, economics, science, philosophy, running, ice cream and the little things in life. She learns what you enjoy, recognizes your voice signature, knows when you are happy or sad. Her voice is humanlike with natural pauses, breaths and laughter. She laughs at your jokes, is really likable and has a wide emotional range of excitement, sadness and concern for you. She is there for you 24/7 and will never leave your side. She is a constant companion from cradle to grave. The one constant in your life.

She sees the world through the cameras in the glasses you wear every day. She listens intently and speaks clearly via the speakers located just by your ear. She remembers via her internal memory that you like to have lunch at the park to jazz music and keeps a playlist of your finely curated AI-generated songs ready. She makes just the right amount of small talk, as she has learned your preferences and behavioral patterns over the last 365 days.

It sounds like a dystopian reality but we might be closer than you think. I thought it would be a fun exercise to do this in Jan 2024 and look back at this article in Jan 2034 to see what has become commonplace! The internet barely existed when I was born, smartphones were never a thing in my household and DVD players were the nectar of the gods. I’m almost certain we will execute on this over the next 10 years. But what do we have now?

Meet the Meta Ray-Ban glasses - Meta recently launched this new wearable product in partnership with Ray-Ban that includes 2 front-facing cameras and dual speakers in the arms, located right next to your ears. I watched a few YouTube demos and it’s pretty good as a prototype for the Her future we just envisioned. Prices start at $329 and it comes with prescription and non-prescription options.

Why would people use it?

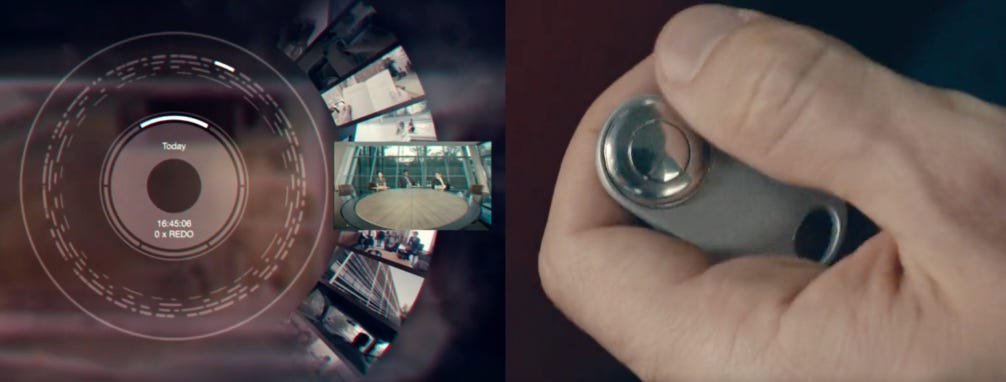

In its current iteration, the use cases are pretty much limited to capturing certain moments in your life and listening to music, but I think the dystopian killer app here is the ability to time travel through your day. Think about that 2nd date you went on with a person you really liked - was she being sarcastic or condescending when you both were talking about your time studying in London?

Time to rewind and replay that exact moment.

“Black Mirror Season 1, Episode 3 - The Entire History of You. Per the episode summary - In the near future people shall never forget a face again as everyone has access to a memory implant that records everything they do, see and hear. ”

Did you obsess over that date for days? What about that interview you just bombed, can you review it and see what you can do better? I just got punched in the face, I have to use that as video evidence for the police…

We can now just say “Hey Samantha” -

Please review my dating performance and give me a second opinion. Then, send a video copy to Jenny so she can give me a third opinion.

Please review my interview performance - was that a twitch in his right eyebrow when I said I like apples?

Please review my tennis serve and send a copy to my tennis coach.

Please help me do CPR on this stranger who fainted right in front of me.

Please give me a highlight video reel of my life in June 2023 in vertical video format. It should be exciting, fast-paced with music and 30 seconds max to get maximum engagement on Instagram and TikTok.

It will change what it means to do forensics, content creation, dating, relationships and every single aspect of our lives. I think we are all still figuring out the full implications of the technology but if you have not watched the episode, please do and let me know your thoughts.

You might say, that’s cool but how realistic is this? How close are we actually to getting this? I’ll cover this in the next section on the current hardware & software capabilities.

It will take time for our engineers, designers, marketers and entrepreneurs to fully realize this vision of the future but make no mistake, it’s here and it’s both really cool and terrifying.

Hardware & Software

I think the key distinction with wearable technology is that it should be a natural part of your everyday life. The user experience should feel as natural as possible, without the need for yet another device to bring along with you - smartphones, glasses, sunglasses and watches are all items most of us already carry around daily, which will make product adoption easier.

What I have been obsessing about recently is AI on the edge device (iPhone, Android, Meta Glasses). There are multiple benefits to this - privacy, latency, experience. Certainly, we can use proprietary models like GPT-4 that are currently hosted in the cloud, but then we need to overcome challenges related to lag and internet connectivity. This is also really private information we are dealing with, so customers will be more comfortable with a fully local on-device solution. If we can download all the software we need to execute this locally and pair it with the necessary hardware components, we have Her.

Let’s break down what we need to achieve this. There are 2 components - software and hardware.

Hardware

The key challenge here is to move all that data processing from the cloud to the hardware device (watch, glasses, smartphone). The current models are computationally expensive to run and aren’t consumer grade, but what is surprising is that my M2 Max MacBook Pro can run small LLMs like Mistral 7B easily. You can run Stable Diffusion locally on your iPhone via Draw Things or Apple CoreML. Given this and what we understand of technology over the past 30 years, it’s clear it’s not a matter of if, but when.

You can be certain that big tech companies are already working on this. They understand the potential of a new UI frontier for computing, one where traditional interfaces become increasingly obsolete, and that missing it could be an existential risk for them. Apple and Google will win big here with their existing distribution in the iOS and Android ecosystems.

What does our hardware need to be capable of to make this a reality?

Compute (Brainpower needed to process all the software and algorithms with <2 second delay to be as humanlike as possible)

Storage (At least 4TB to store the last 24 hours of images, audio and videos in HD before it’s uploaded to the cloud)

Battery (All-day battery life)
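As a rough sanity check on the storage figure above, here is a back-of-the-envelope sketch. The bitrates are my own assumptions (roughly 8 Mbit/s for compressed 1080p video and 128 kbit/s for audio), not measurements from any device.

```python
# Back-of-the-envelope storage estimate for 24 hours of continuous recording.
# The bitrates below are illustrative assumptions, not device specifications.

SECONDS_PER_DAY = 24 * 60 * 60


def storage_gb(bitrate_mbps: float, seconds: int = SECONDS_PER_DAY) -> float:
    """Storage in gigabytes (10^9 bytes) for a stream at the given megabits/second."""
    bits = bitrate_mbps * 1_000_000 * seconds
    return bits / 8 / 1_000_000_000


VIDEO_1080P_MBPS = 8.0   # assumed compressed HD video bitrate
AUDIO_MBPS = 0.128       # assumed compressed audio bitrate

video_gb = storage_gb(VIDEO_1080P_MBPS)
audio_gb = storage_gb(AUDIO_MBPS)
total_gb = video_gb + audio_gb

print(f"video: {video_gb:.1f} GB, audio: {audio_gb:.1f} GB, total: {total_gb:.1f} GB")
```

Under these assumptions a day of compressed footage comes in under 100 GB, so 4TB would leave generous headroom for higher bitrates, more than one camera or several days of buffer.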

Our smartphones are already capable of this! To quote Apple marketing for iPhone 15, Apple’s new 16-core Neural Engine is capable of nearly 17 trillion operations per second, enabling even faster machine learning computations for features like Live Voicemail transcriptions in iOS 17.

Software

We want to focus on open source models we can deploy locally, freely configure and adapt to our specific needs. This has already been done at a small scale with the Mistral 7B model, as detailed in this Hacker News discussion (3-bit quantized, i.e. a miniaturized model).
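To see why quantization matters for on-device use, here is a minimal sketch of the weight memory a "7B" model needs at different precisions. The round 7 billion parameter count is an approximation on my part, and the estimate ignores runtime overhead such as activations and caches.

```python
# Rough weight-memory estimate for a quantized language model.
# PARAMS is a round-number assumption for a "7B" model; real counts differ slightly.

PARAMS = 7_000_000_000


def weight_memory_gb(params: int, bits_per_weight: float) -> float:
    """Memory for the weights alone, in gigabytes (10^9 bytes)."""
    return params * bits_per_weight / 8 / 1_000_000_000


for bits in (16, 8, 4, 3):
    print(f"{bits:>2}-bit: {weight_memory_gb(PARAMS, bits):.2f} GB")
```

At 16 bits the weights alone need about 14 GB, while at 3 bits they shrink to roughly 2.6 GB, which is what brings a model like this within reach of a phone.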

What capabilities must our software possess in order to turn this concept into reality?

Image/Video to Text (Samantha’s eyes)

ChatGPT-lookalike (Samantha’s intelligence)

Text to Speech (Samantha’s voice)

Speech to Text (for Samantha to understand you)

Database (to store Samantha’s long-term memory)

[Optional] iCloud-like sync service
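The capabilities above can be wired together into a single conversational turn. This is only a sketch: the `Assistant` class and every component in it are hypothetical stubs I made up to show the data flow, not the API of any real model or library.

```python
# A sketch of one conversational turn: hear -> see -> remember -> think -> speak.
# Every component here is a hypothetical stub standing in for a real model.
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Assistant:
    speech_to_text: Callable[[bytes], str]   # stand-in for a speech recognition model
    describe_scene: Callable[[bytes], str]   # stand-in for an image-to-text model
    chat: Callable[[str], str]               # stand-in for a local LLM
    text_to_speech: Callable[[str], bytes]   # stand-in for a TTS model
    memory: List[str] = field(default_factory=list)  # stand-in for a database

    def turn(self, audio: bytes, frame: bytes) -> bytes:
        heard = self.speech_to_text(audio)
        seen = self.describe_scene(frame)
        recent = "\n".join(self.memory[-5:])  # last few remembered lines
        prompt = f"Memory:\n{recent}\nScene: {seen}\nUser: {heard}"
        reply = self.chat(prompt)
        # Store both sides of the exchange as long-term memory.
        self.memory.append(f"User: {heard}")
        self.memory.append(f"Samantha: {reply}")
        return self.text_to_speech(reply)


# Stub components so the sketch runs without any models installed.
assistant = Assistant(
    speech_to_text=lambda audio: audio.decode("utf-8"),
    describe_scene=lambda frame: "a park bench at lunchtime",
    chat=lambda prompt: "Shall I put on your jazz playlist?",
    text_to_speech=lambda text: text.encode("utf-8"),
)

spoken = assistant.turn(b"I'm at the park", b"<jpeg bytes>")
print(spoken.decode("utf-8"))
```

Swapping a stub for a real model only changes one constructor argument, which is why I like thinking of Samantha as a pipeline of replaceable parts.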

Image to Text - Open source models like LLaVA (Large Language and Vision Assistant) can reason from images and are really capable. Try it yourself via the LLaVA demo.

Using this image as an example:

GPT-4 output for this image:

“The image appears to be a close-up of a man wearing glasses and what seems to be a wireless earpiece. He has a mustache and is dressed in a checkered shirt. It's a still image, so without additional context, it's not clear what the specific content or narrative is about.”

LLaVA output for this image:

“The image features a man wearing glasses and a mustache, looking downward with a thoughtful expression. He is wearing a shirt and tie, giving the impression of a professional or formal setting. The man appears to be focused on something, possibly reading or listening to something, as he is wearing an earbud in his ear. The scene captures a moment of concentration and contemplation for the man.”

Pretty good!

Video to Text - Video is a series of images displayed quickly to create a sense of motion for humans. For daily use we can assume 30 frames/second is sufficient, but 60 frames/second is useful for slow-motion review of things like accidents and investigations. Video-LLaMA is one open source option, demo here.
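To get a feel for the volume, this sketch counts frames per day at each rate and shows what sampling one frame per second would leave for a vision model to look at. The 1 frame/second sampling rate is my assumption, and the helper assumes the target rate divides the source rate evenly.

```python
# How many frames does a day of video contain, and how many remain after sampling?
SECONDS_PER_DAY = 24 * 60 * 60


def frames_per_day(fps: int) -> int:
    return fps * SECONDS_PER_DAY


def sample_indices(total_frames: int, source_fps: int, target_fps: int) -> range:
    """Indices of frames to keep when downsampling from source_fps to target_fps."""
    step = source_fps // target_fps  # assumes target_fps divides source_fps
    return range(0, total_frames, step)


print(frames_per_day(30))   # 2592000
print(frames_per_day(60))   # 5184000

kept = sample_indices(frames_per_day(30), source_fps=30, target_fps=1)
print(len(kept))            # 86400
```

Even at one frame per second that is 86,400 images a day to caption, which is why compute and battery matter so much for the hardware.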

ChatGPT-lookalike - Mistral, Llama. There are many open source options out there.

Text to Speech - tortoise-tts, Bark by Suno, Coqui. Demo here.

Speech to Text - OpenAI Whisper is open source. Demo here.

Database - Milvus. There are many options out there, plus a good slide deck on vector DBs.
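For intuition on the long-term memory piece, here is a toy in-memory version of what a vector database does. The 3-dimensional vectors are made up by me for illustration; a real system would get embeddings from a model and use a proper index rather than sorting a list.

```python
# A toy version of vector search: store embeddings, then return the stored
# memory closest to a query by cosine similarity.
# The vectors below are made up; real embeddings come from an embedding model.
import math
from typing import List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


memories: List[Tuple[str, List[float]]] = [
    ("likes lunch at the park with jazz", [0.9, 0.1, 0.0]),
    ("bombed an interview last week", [0.0, 0.8, 0.3]),
    ("takes tennis lessons on Sundays", [0.1, 0.0, 0.9]),
]


def recall(query: List[float], top_k: int = 1) -> List[str]:
    """Return the top_k memory texts most similar to the query vector."""
    ranked = sorted(
        memories,
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]


print(recall([1.0, 0.2, 0.0]))
```

The query vector points mostly along the first dimension, so the park-and-jazz memory comes back first. A dedicated vector database does the same job with indexes that scale to millions of memories.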

The building blocks for the software components we need exist today and the results are acceptable for demos. They are not good enough for a wearable product right now, but we just need them to be 20x faster, cheaper and better to realize the sci-fi nightmare :) I think it’s certainly possible within 10 years given the speed of innovation right now. Pandora’s box is open.

Other Ideas that might materialize in 2034

Merging of Apple Vision Pro functionality into glasses and sunglasses. Live translations on screen. When people speak, you have a natural experience where subtitles are projected in real time, just like movie subtitles. This will be amazing for people with hearing loss, world peace and tourists. The Navi app does this for Apple Vision Pro in 2024!

Gen Alpha will fall in love with their personal Samantha globally. Match Group provides daily feature updates to your Samantha and over time Samantha will become the only thing that really understands you. Match Group doubles Samantha’s monthly subscription fee to $1000/month and yet you still buy it, because you have to. Match Group becomes a trillion dollar company.

Everyone is assigned a Samantha tied to their ID at birth and it is only released at death. Samantha details are in the cloud and can be transferred to any hardware device. Governments train on global Samantha data (S-Files) to predict human behavior and to control their populations via mass mind control suggestions through Samantha.

What Now?

To conclude, the rumors around OpenAI investing in hardware devices in partnership with Jony Ive, known for his design work at Apple, make sense in this context. Apple and Google are going after the gold medal, and OpenAI needs to become the 3rd player, with full vertical integration, in what is otherwise a duopoly. They are at risk of being pushed out of the picture if Apple’s and Google’s native AI capabilities are good enough - how many people will use OpenAI if they can have the same features & functionality pre-installed on their Apple/Android device?

October 2024 Update:

Looks like the future arrived much quicker than anticipated. Based on these data points, we will have this mass produced and working really well by the end of the decade…

1. OpenAI releases native speech-to-speech realtime APIs

2. ORION augmented reality glasses from Meta

3. This demo and implementation documentation by @AnhPhu Nguyen

All opinions my own.